Commentary: The Future of 3D Graphics with GPU Computing and Ray Tracing

The Future of 3D Graphics

In recent years we’ve seen tremendous interest in utilizing the immense parallel processing power of GPUs for uses beyond classic 3D graphics processing. GPUs have evolved far beyond simply implementing a fixed-function graphics pipeline to become flexible, programmable, massively parallel computers. Similarly, 3D graphics has evolved to encompass many forms of visual computing applications. GPUs are now considered “computational graphics” engines, as many of the fixed-function parts of the graphics pipeline have become programmable. And we have only seen the beginning of this transformation.

GPUs Lead Evolution to Many-Core Processing

The programmable and flexible modern GPU is one of the most powerful computing devices on the planet. Since the year 2000, the individual processing cores within GPUs have processed data using IEEE floating point precision, just like standard CPUs (aka “real computers”). The raw floating point processing power of a modern GPU is much larger than that of even the latest multi-core CPU, and it is growing faster. This has attracted a lot of attention in the computing community. In fact, an entire field of effort has been spawned called GPGPU, or General-Purpose Processing on GPUs, reflecting the desire to use the power of the GPU for broader applications than just graphics. More recently, this broader effort, which we call GPU Computing, has been made easier by the introduction of NVIDIA’s CUDA (Compute Unified Device Architecture) programming environment. CUDA allows GPUs to be programmed using the C language for non-graphics applications.
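To make the programming model concrete, here is a minimal sketch of the data-parallel style that GPU Computing relies on: one small function runs once per thread, and each thread handles one data element. This is a plain-Python emulation, not CUDA code. The names `saxpy_kernel` and `launch` are hypothetical and chosen only for illustration, and a sequential loop stands in for the parallel hardware.

```python
# Plain-Python emulation of a data-parallel kernel; this is NOT CUDA code.
# saxpy_kernel and launch are hypothetical names used only for illustration.

def saxpy_kernel(thread_id, a, x, y, out):
    """Work done by ONE thread: a single multiply-add on its own element."""
    if thread_id < len(x):  # guard: more threads may be launched than there is data
        out[thread_id] = a * x[thread_id] + y[thread_id]


def launch(kernel, num_threads, *args):
    """Run the kernel once per thread index.

    A GPU would execute these invocations in parallel; here a sequential
    loop stands in for the hardware.
    """
    for thread_id in range(num_threads):
        kernel(thread_id, *args)


x = [1.0, 2.0, 3.0, 4.0]
y = [10.0, 20.0, 30.0, 40.0]
out = [0.0] * len(x)

# Launch 8 threads for 4 elements; the 4 surplus threads fail the guard and do nothing.
launch(saxpy_kernel, 8, 2.0, x, y, out)
print(out)
```

The point of the sketch is that the kernel contains no loop over the data. The parallelism comes entirely from how many threads are launched, which is why the same program can scale across hundreds of cores.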

All processors evolve and change with time, not just GPUs. We are seeing this with the difficult transition that CPUs are making from single-core to multi-core. Due to problems with heat dissipation and power consumption, it is no longer possible to create faster CPUs that simply run at ever higher clock rates. However, it’s quite easy to add multiple CPU cores to a single chip -- but that’s where the simplicity ends. It is difficult for programmers to grasp how to program multi-core CPUs effectively. Also, for the first time in several decades, programmers can no longer simply wait 18-24 months for their single-threaded programs to double in speed as processor clock speeds increase. An industry-wide effort to “refactor” algorithms to run on multi-core CPUs is taking place while, at the same time, the emergence of GPU Computing gives programmers a powerful new tool. Today, few application programs benefit from multi-core CPUs. In contrast, the GPU programming model, aided by graphics APIs and the C programming language, is straightforward and easy to use. Although applying GPUs to a variety of parallel computing tasks is a natural evolution, trying to process demonstrably parallel graphics workloads with multi-core CPUs is inherently challenging, because simply grouping together many CPUs will not produce an integrated parallel processor. A GPU consists of many parallel processor cores integrated to work together from the ground up.

Graphics processors have arguably been multi-core processors for almost ten years. In 1998, NVIDIA’s TNT product was built with two pixel pipelines and two texture mapping units. We never looked back -- the current GeForce GT200 chip has 240 processor cores. And, not only does it have 240 processor cores, but each core can run many threads, or program copies, at a time. The GeForce GT200 processes over 30,000 threads at once -- each processing pixels, vertices, or triangles! Imagine achieving that kind of parallelism and throughput with dual- or quad-core CPUs. It’s just not possible. But, that’s not all. In addition to the 30,000+ pixel or vertex threads, there are many thousands of other concurrent operations being processed by the GPU. Texture map calculations, rasterization, Z-buffer hidden-surface-removal, color blending for transparency, and anti-aliasing (edge smoothing) are all happening simultaneously. Without the special-purpose hardware included in every GPU to perform these operations, it would require hundreds if not thousands of CPU cores to match the performance of a single GPU.

Is Ray Tracing Ready for Mainstream Use?

Some blogs and press reports have commented that the future of 3D graphics will be based on the technique known as “ray tracing,” and therefore the performance of rasterization is unimportant. While we are enormous fans of interactive ray tracing (IRT), it still requires a massive amount of processing power. IRT, along with many other ideas that we are pursuing, will provide at least another decade of innovation opportunity for GPU designers. This sustainable innovation opportunity will keep the GPU industry vibrant. But rather than a “start from scratch” architectural approach, we believe we need to preserve the massive investments of all the industries that are deeply invested in OpenGL and DirectX. We believe the most valuable architectures are those that extend rather than disrupt the installed base, such as x86, Windows, HTML, and TCP/IP. Preserving these investments is important not only for maintaining the productivity of current applications and developers, but also for encouraging investment in future applications. Furthermore, ray tracing is not a panacea or really a goal in itself, but rather -- potentially -- a way to make better pictures more easily. Although some people may say that ray tracing is more accurate or “the right way,” both ray tracing and rasterization are approximations of the physical phenomena of light reflection from surfaces. Neither is inherently better or worse -- just different. Three possible reasons for adopting ray tracing are (1) ease of programming, (2) faster visual effects, and (3) the possibility of better visual effects. Let’s talk about these reasons separately.

- Ease of programming is important for 3D graphics as well as for many other applications. Ray tracing is believed to be “easier” for programmers than rasterization because ray tracing can do everything in a single unified approach. Although it is true that rays can be traced for every possible visual effect, this is not necessarily the best and fastest approach.

- Ray tracing will never be as fast as hardware rasterization for the purpose of visibility (i.e., which objects the eye sees directly). Simple visibility is not enough, however. We also would like anti-aliasing (i.e., the smoothing of edges). Ray tracing can accomplish this effect by simply tracing more rays, although this is more expensive and slower than allowing the GPU hardware to perform this task through rasterization and dedicated anti-aliasing hardware.

- One visual effect that is difficult to do well with rasterization is shadows. It is complicated to render sharp-edged shadows without having jagged edges, and there are no really robust approaches for making soft-edged shadows or the corresponding effects of multiple inter-reflections of light. These are visual effects for which ray tracing is indeed a more general solution. Also, as light reflects from each surface to shine on other surfaces, every single object in a scene is both a light source and an occluder (an object that blocks light). In order to make the “perfect” picture, you would need to trace a ray from every point on every object in every direction. A lot of rays would be required to simulate all of that light bouncing around. Although this is conceptually simple, it is more work than is practical with modern CPUs.
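The cost of anti-aliasing by “simply tracing more rays” can be sketched in a few lines. The example below is only an illustration under stated assumptions: a single hard-coded sphere, a camera at the origin looking down the negative z axis, and a regular sub-pixel sample grid. The function names (`hits_sphere`, `pixel_coverage`, `render`) are hypothetical, and it computes only visibility (hit or miss), not shading or shadows.

```python
import math


def hits_sphere(origin, direction, center, radius):
    """Return True if the ray origin + t*direction (t > 0) intersects the sphere."""
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c          # discriminant of the ray/sphere quadratic
    if disc < 0.0:
        return False                    # the ray misses the sphere entirely
    t_far = (-b + math.sqrt(disc)) / (2.0 * a)
    return t_far > 0.0                  # at least one intersection lies in front


def pixel_coverage(px, py, width, height, samples_per_axis):
    """Fraction of this pixel's rays that hit the (assumed) sphere."""
    center, radius = (0.0, 0.0, -3.0), 1.0   # illustrative scene, not from the article
    n = samples_per_axis
    hits = 0
    for sy in range(n):
        for sx in range(n):
            # Regular sub-pixel grid, mapped to image-plane coordinates in [-1, 1].
            u = ((px + (sx + 0.5) / n) / width) * 2.0 - 1.0
            v = ((py + (sy + 0.5) / n) / height) * 2.0 - 1.0
            if hits_sphere((0.0, 0.0, 0.0), (u, v, -1.0), center, radius):
                hits += 1
    return hits / (n * n)


def render(width, height, samples_per_axis):
    """Return a height x width grid of coverage values in [0, 1]."""
    return [[pixel_coverage(x, y, width, height, samples_per_axis)
             for x in range(width)]
            for y in range(height)]


aliased = render(16, 16, 1)    # 1 ray per pixel: every pixel is fully in or out
smoothed = render(16, 16, 4)   # 16 rays per pixel: edge pixels get partial coverage
```

With one ray per pixel, each coverage value is either 0 or 1, which is what produces jagged edges. Going to a 4×4 sample grid yields fractional values along the silhouette, but it traces 16 times as many rays for the same image, which is the trade-off the second bullet above describes.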

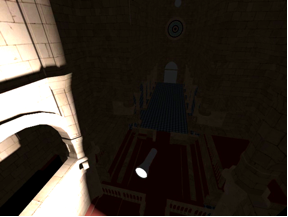

Parallel GPU hardware can trace rays as well. Making a picture that is noticeably better than what rasterization can do today is a difficult but worthy goal. That is one of the most exciting parts of the field of computer graphics -- we’re never done. There is always something more to accomplish. The picture of the cathedral in this article is shown in two versions. Figure 1 could be rendered either with ray tracing or rasterization. There is no special effort involved in making shadows or correct global illumination. Figure 2 is rendered using global illumination, which is the effect of light inter-reflecting between surfaces. The back of the cathedral -- behind the light -- is lit by the reflection of the light off the surfaces in front of the light. By the way, both of these images are rendered using a GPU. The bottom line is that a GPU can now make any picture that a CPU can make, rasterized or ray traced. The combination of special-purpose GPU hardware and APIs (DirectX and OpenGL) with computational graphics is powerful.

The GPU Can Do It All

The old debate of ray tracing vs. rasterization has been going on as long as there has been ray tracing and rasterization. The debate has now morphed into ray tracing vs. GPUs. But, comparing an approach (ray tracing) with a device (GPU) is a funny way to express the comparison. That’s like asking which is better, fuel or automobiles? Most likely, the answer to both questions is both. Not only do GPUs perform rasterization efficiently using the conventional API-based programmable graphics pipeline, but GPU computing has the promise of performing other rendering approaches as well. It is likely that game developers, film studios, animators, and artists will prefer to take advantage of all of the benefits of ray tracing and rasterization, as well as a variety of other techniques, all at the same time. Why choose when you can have it all? Interestingly, one of the best GPGPU applications may be… 3D graphics!

About the Author

David Kirk has been NVIDIA's Chief Scientist since January 1997. His contribution includes leading NVIDIA graphics technology development for today’s most popular consumer entertainment platforms. In 2006, Dr. Kirk was elected to the National Academy of Engineering (NAE) for his role in bringing high-performance graphics to personal computers. Election to the NAE is among the highest professional distinctions awarded in engineering. In 2002, Dr. Kirk received the SIGGRAPH Computer Graphics Achievement Award for his role in bringing high-performance computer graphics systems to the mass market. From 1993 to 1996, Dr. Kirk was Chief Scientist, Head of Technology for Crystal Dynamics, a video game manufacturing company. From 1989 to 1991, Dr. Kirk was an engineer for the Apollo Systems Division of Hewlett-Packard Company. Dr. Kirk is the inventor of 50 patents and patent applications relating to graphics design and has published more than 50 articles on graphics technology. Dr. Kirk holds B.S. and M.S. degrees in Mechanical Engineering from the Massachusetts Institute of Technology and M.S. and Ph.D. degrees in Computer Science from the California Institute of Technology.

Note: The content of this commentary reflects the personal opinion of the author. This opinion is not necessarily shared by the entire editorial staff.